Abstract

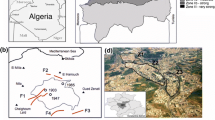

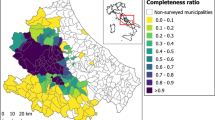

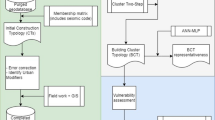

The estimation of the seismic vulnerability of buildings at an urban scale, a crucial element in any risk assessment, is an expensive, time-consuming, and complicated task, especially in moderate-to-low seismic hazard regions, where fewer resources are mobilized for seismic evaluation even though the hazard is not negligible. In this paper, we propose a way to perform a quick estimation using convenient, reliable building data that are readily available regionally instead of the information usually required by traditional methods. Using a dataset of existing buildings in Grenoble (France) with an EMS98 vulnerability classification and two different data mining techniques (association rule learning and support vector machine), we developed seismic vulnerability proxies. These were applied to the whole of France using basic information from national databases (census information) and data derived from the processing of satellite images and aerial photographs to produce a nationwide vulnerability map. This macroscale method to assess vulnerability is easily applicable where information on the structural characteristics and constructional details of the building stock is scarce. The approach was validated with data acquired for the city of Nice, by comparison with the RiskUE method. Finally, damage estimates were compared with observations from historic earthquakes that caused moderate-to-strong damage in France. We show that, due to the evolution of vulnerability in cities, the number of seriously damaged buildings would be expected to double or triple if these historic earthquakes were to occur today.

References

Agrawal R, Imieliński T, Swami A (1993) Mining association rules between sets of items in large databases. In: Proceedings of the 1993 ACM SIGMOD international conference on Management of data—SIGMOD ‘93, p 207. doi:10.1145/170035.17007

Bard PY, Duval AM, Bertrand E, Vassiliadès JF, Vidal S, Thibault C, Guyet B, Mèneroud JP, Gueguen P, Foin P, Dunand F, Bonnefoy-Claudet S, Vettori G (2005) Le risque Sismique à Nice: apport méthodologique, résultats et perspectives opérationnelles. Final technical report of the GEMGEP project. CETE-Méditerranée

Baroux E, Pino NA, Valensise G, Scotti O, Cushing ME (2003) Source parameters of the 11 June 1909, Lambesc (Provence, southeastern France) earthquake: a reappraisal based on macroseismic, seismological, and geodetic observations. J Geophys Res 108(B9):2454. doi:10.1029/2002JB002348

Borsi B, Dell’Acqua F, Faravelli M, Gamba P, Lisini G, Onida M, Polli D (2010) Vulnerability study on a large industrial area using satellite remotely sensed images. Bull Earthq Eng 9:675–690. doi:10.1007/s10518-010-9211-9

Boser BE, Guyon IM, Vapnik VN (1992) A training algorithm for optimal margin classifiers. In: Haussler D (ed) 5th annual ACM workshop on COLT. ACM Press, Pittsburgh, pp 144–152

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20:273–297

Duin RPW, Juszczak P, Paclik P, Pekalska E, de Ridder D, Tax DMJ, Verzakov S (2007) PRTools 4.1, a Matlab toolbox for pattern recognition. Delft University of Technology, Delft

Dunand F, Gueguen P (2012) Comparison between seismic and domestic risk in moderate seismic hazard prone region: the Grenoble City (France) test site. Nat Hazards Earth Syst Sci 12:511–526. doi:10.5194/nhess-12-511-2012

Geiss C, Taubenböck H (2012) Remote sensing contributing to assess earthquake risk: from a literature review towards a roadmap. Nat Hazards 68:7–48. doi:10.1007/s11069-012-0322-2

Grunthal G, Levret A (2001) L’échelle macrosismique européenne. European macroseismic scale 1998 (EMS-98) Conseil de l’Europe—Cahiers du Centre Européen de Géodynamique et de Séismologie, vol 19

Gruppo Nazionale per la Difesa dai Terremoti, Roma (GNDT) (1993) Rischio Sismico di Edifici Pubblici. Consiglio Nazionale delle Ricerche

Gueguen P (2013) Seismic vulnerability of structures. Civil engineering and geomechanics series. ISTE Ltd and Wiley, London. ISBN 978-1-84821-524-5. Edited by Philippe Gueguen

Gueguen P, Michel C, LeCorre L (2007) A simplified approach for vulnerability assessment in moderate-to-low seismic hazard regions: application to Grenoble (France). Bull Earthq Eng 5:467–490. doi:10.1007/s10518-007-9036-3

Hamaina R, Leduc T, Moreau G (2012) Towards urban fabrics characterization based on building footprints. In: Gensel J et al (eds) Bridging the geographic information sciences, lecture notes in geoinformation and cartography. doi:10.1007/978-3-642-29063_18

HAZUS (1997) Earthquake loss estimation methodology, Hazus technical manuals. National Institute of Building Science, Federal Emergency Management Agency (FEMA), Washington

Jackson J (2006) Fatal attraction: living with earthquakes. The growth of villages into megacities and earthquake vulnerability in the modern world. Philos Trans R Soc 364(1845):1911–1925

Kappos AJ, Panagopoulos G, Panagiotopoulos C, Penelis G (2006) A hybrid method for the vulnerability assessment of R/C and URM buildings. Bull Earthq Eng 4(4):391–413

Lagomarsino S, Giovinazzi S (2006) Macroseismic and mechanical models for the vulnerability and damage assessment of current buildings. Bull Earthq Eng 4:415–443. doi:10.1007/s10518-006-9024-z

Lambert J (1997) Les tremblements de terre en France: hier, aujourd’hui, demain. BRGM Eds. Orléans, France

Michel C, Guéguen P, Causse M (2012) Seismic vulnerability assessment to slight damage based on experimental modal parameters. Earthq Eng Struct Dyn 41(1):81–98. doi:10.1002/eqe.1119

Pierre J-P, Montagne M (2004) The 20 April 2002, Mw 5.0 Au Sable Forks, New York, earthquake: a supplementary source of knowledge on earthquake damage to lifelines and buildings in Eastern North America. Seismol Res Lett 75(5):626–635

Riedel I, Gueguen P, Dunand F, Cottaz S (2014) Macro-scale vulnerability assessment of cities using association rule learning. Seismol Res Lett 85(2):295–305. doi:10.1785/0220130148

Rothé J-P, Vitart M (1969) Le séisme d’Arette et la séismicité des Pyrénées. 94e Congrès national des sociétés savantes, Pau, sciences, t. II, pp 305–319

Scotti O, Baumont D, Quenet G, Levret A (2004) The French macroseismic database SISFRANCE: objectives, results and perspectives. Ann Geophys 47(2/3):571–581. doi:10.4401/ag-3323

Spence R, Lebrun B (2006) Earthquake scenarios for European cities: the risk-UE project. Bull Earthq Eng 4 special issue

Spence R, So E, Jenny S, Castella H, Ewald M, Booth E (2008) The global earthquake vulnerability estimation system (GEVES): an approach for earthquake risk assessment for insurance applications. Bull Earthq Eng 6:463–483. doi:10.1007/s10518-008-9072-7

Spence R, Foulser-Piggott R, Pomonis A, Crowley H, Guéguen P, Masi A, Chiauzzi L, Zuccaro G, Cacace F, Zulfikar C, Markus M, Schaefer D, Sousa ML, Kappos A (2012) The European building stock inventory: creating and validating a uniform database for earthquake risk modeling. In: The 15th world conference on earthquake engineering, Sept 2012, Lisbon, Portugal

Teukolsky SA, Vetterling W, Flannery BP (2007) Section 16.5. Support vector machines. Numerical recipes: the art of scientific computing, 3rd edn. Cambridge University Press, New York. ISBN 978-0-521-88068-8

Wald DJ, Quitoriano V, Heaton TH, Kanamori H, Scrivner CW, Worden CB (1999) TriNet “ShakeMaps”: rapid generation of peak ground motion and intensity maps for earthquakes in Southern California. Earthq Spectra 15(3):537–555

Worden CB, Wald DJ, Allen TI, Lin K, Garcia D, Cua G (2010) A revised ground motion and intensity interpolation scheme for ShakeMap. Bull Seismol Soc Am 100(6):3083–3096

Acknowledgments

This work was supported by the French Research Agency (ANR). Ismaël Riedel is funded by the MAIF Foundation. INSEE data were prepared and provided by the Centre Maurice Halbwachs (CMH).

Appendix

1.1 Support vector machine (SVM) definitions

For the sake of simplicity, a formal definition of the linear binary case is presented first. The nonlinear case (still binary) is then studied. Finally, the multiclass case (n-class classification problem) is considered. The definitions follow Teukolsky et al. (2007) and Cortes and Vapnik (1995).

1.2 Linear classification

Before entering into the mathematical definitions, a qualitative graphical description helps to convey the basic foundation of the method. Given some data points belonging to one of two classes (binary problem), each viewed by the SVM as a p-dimensional vector (a list of p numbers), many planes might exist that classify the data (Fig. 17). Intuitively, a good separation is achieved by the plane that has the largest distance to the nearest training data point of any class (so-called functional margin), since in general the larger the margin is, the lower the generalization error of the classifier. Therefore, the basic idea is to choose the plane so that the distance from it to the nearest data point on each side is maximized.

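Given some training data \( D \), a set of \( n \) points of the form

\[ D = \left\{ \left( \varvec{x}_{i} , y_{i} \right) \mid \varvec{x}_{i} \in \mathbb{R}^{p} ,\; y_{i} \in \{ - 1, 1\} \right\}_{i = 1}^{n} \]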

where \( y_{i} \) is either 1 or −1, indicating the class to which the point \( \varvec{x}_{i} \) belongs. Each \( \varvec{x}_{i} \) is a p-dimensional real vector. We want to find the maximum-margin hyperplane that divides the points having \( y_{i} = 1 \) from those having \( y_{i} = - 1 \). Any hyperplane can be written as the set of points \( \varvec{x} \) satisfying

\[ \varvec{w} \cdot \varvec{x} - b = 0 \]

where · denotes the dot product and \( \varvec{w} \) the normal vector to the hyperplane. The parameter \( \frac{b}{{\left\| \varvec{w} \right\|}} \) determines the offset of the hyperplane from the origin along the normal vector \( \varvec{w} \) (Fig. 18). If the training data are linearly separable, we can select two hyperplanes such that they separate the data with no points between them, and then try to maximize their distance. The region bounded by them is called “the margin.” These hyperplanes can be described by the equations (see Fig. 18)

\[ \varvec{w} \cdot \varvec{x} - b = 1 \quad \text{and} \quad \varvec{w} \cdot \varvec{x} - b = - 1 \]

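By using geometry, we find that the distance between these two hyperplanes is \( \frac{2}{{\left\| \varvec{w} \right\|}} \), so maximizing it means minimizing \( \left\| \varvec{w} \right\| \). As we also have to prevent data points from falling into the margin, we add the following constraint: for each \( i \) either

\[ \varvec{w} \cdot \varvec{x}_{i} - b \ge 1 \;\; \text{for } \varvec{x}_{i} \text{ of the first class} \quad \text{or} \quad \varvec{w} \cdot \varvec{x}_{i} - b \le - 1 \;\; \text{for } \varvec{x}_{i} \text{ of the second class} \]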

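This can be rewritten as

\[ y_{i} \left( \varvec{w} \cdot \varvec{x}_{i} - b \right) \ge 1 \quad \text{for all } 1 \le i \le n \]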

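The optimization problem is then posed as:

\[ \min_{\varvec{w}, b} \left\| \varvec{w} \right\| \quad \text{subject to} \quad y_{i} \left( \varvec{w} \cdot \varvec{x}_{i} - b \right) \ge 1, \quad i = 1, \ldots , n \]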

To simplify the problem, \( \left\| \varvec{w} \right\| \), the norm of \( \varvec{w} \), can be replaced in the objective with \( \frac{1}{2}\left\| \varvec{w} \right\|^{2} \) without changing the solution (the minima of the original and the modified problems are attained at the same \( \varvec{w} \) and \( b \)). This is a quadratic programming optimization problem.
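As a rough illustration of this formulation, the following sketch checks the margin constraints \( y_{i} (\varvec{w} \cdot \varvec{x}_{i} - b) \ge 1 \) for a few hand-picked candidate hyperplanes and keeps the feasible one with the smallest \( \left\| \varvec{w} \right\|^{2} \), that is, the widest margin \( 2/\left\| \varvec{w} \right\| \). The toy data, the candidate list, and all function names are assumptions made for the example; it is not a quadratic programming solver and not the implementation used in the study.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def margin_width(w):
    """Distance 2/||w|| between the hyperplanes w.x - b = 1 and w.x - b = -1."""
    return 2.0 / math.sqrt(dot(w, w))

def is_feasible(w, b, X, y):
    """True if every training point satisfies y_i * (w.x_i - b) >= 1."""
    return all(yi * (dot(w, xi) - b) >= 1.0 for xi, yi in zip(X, y))

# Hypothetical, linearly separable toy data (not taken from the study).
X = [(0.0, 0.0), (-1.0, 0.0), (2.0, 2.0), (3.0, 2.0)]
y = [-1, -1, 1, 1]

# Hypothetical candidate hyperplanes (w, b); a QP solver would search over all of them.
candidates = {
    "narrow": ((1.0, 1.0), 2.0),
    "wide": ((0.5, 0.5), 1.0),
    "too_wide": ((0.25, 0.25), 0.5),  # violates the constraints
}

# Keep only candidates that leave no point inside the margin.
feasible = {name: wb for name, wb in candidates.items() if is_feasible(*wb, X, y)}

# Among feasible candidates, minimizing ||w||^2 maximizes the margin width.
best = min(feasible, key=lambda name: dot(feasible[name][0], feasible[name][0]))
best_width = margin_width(feasible[best][0])
print(best, round(best_width, 3))
```

Minimizing \( \left\| \varvec{w} \right\|^{2} \) rather than \( \left\| \varvec{w} \right\| \) selects the same candidate here, which mirrors the substitution described above.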

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equality constraints.

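By introducing Lagrange multipliers \( \varvec{\alpha} \), the previous constrained problem can be expressed as

\[ \min_{\varvec{w}, b} \; \max_{\varvec{\alpha} \ge 0} \left\{ \frac{1}{2}\left\| \varvec{w} \right\|^{2} - \sum\limits_{i = 1}^{n} \alpha_{i} \left[ y_{i} \left( \varvec{w} \cdot \varvec{x}_{i} - b \right) - 1 \right] \right\} \]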

This problem can now be solved by standard quadratic programming techniques and programs. The “stationarity” Karush–Kuhn–Tucker condition implies that the solution can be expressed as a linear combination of the training vectors

\[ \varvec{w} = \sum\limits_{i = 1}^{n} \alpha_{i} y_{i} \varvec{x}_{i} \]

Only a few \( \alpha_{i} \) will be greater than zero. The corresponding \( \varvec{x}_{i} \) are exactly the support vectors, which lie on the margin and satisfy

\[ y_{i} \left( \varvec{w} \cdot \varvec{x}_{i} - b \right) = 1 \]

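From this, we can derive that the support vectors also satisfy

\[ \varvec{w} \cdot \varvec{x}_{i} - b = \frac{1}{{y_{i} }} = y_{i} \quad \Leftrightarrow \quad b = \varvec{w} \cdot \varvec{x}_{i} - y_{i} \]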

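which allows defining the offset \( b \). In practice, it is more robust to average over all \( N_{\text{sv}} \) support vectors

\[ b = \frac{1}{{N_{\text{sv}} }}\sum\limits_{i = 1}^{{N_{\text{sv}} }} \left( \varvec{w} \cdot \varvec{x}_{i} - y_{i} \right) \]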
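To make the last two steps concrete, the sketch below recovers the hyperplane from a given set of multipliers: \( \varvec{w} \) as the sum of \( \alpha_{i} y_{i} \varvec{x}_{i} \) over the training vectors, and \( b \) as the average of \( \varvec{w} \cdot \varvec{x}_{i} - y_{i} \) over the support vectors (those with \( \alpha_{i} > 0 \)). The three-point data set and the multiplier values are assumptions chosen by hand so that the optimality conditions hold for this toy case; in practice the multipliers come from a quadratic programming solver, and the function names are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def weights_from_multipliers(alphas, X, y):
    """Normal vector w = sum_i alpha_i * y_i * x_i."""
    dim = len(X[0])
    return [sum(a * yi * xi[k] for a, xi, yi in zip(alphas, X, y))
            for k in range(dim)]

def offset_from_support_vectors(w, alphas, X, y, tol=1e-12):
    """Offset b averaged over the support vectors: mean of (w.x_i - y_i)."""
    support = [(xi, yi) for a, xi, yi in zip(alphas, X, y) if a > tol]
    return sum(dot(w, xi) - yi for xi, yi in support) / len(support)

# Hypothetical toy data: the third point lies outside the margin.
X = [(0.0, 0.0), (2.0, 2.0), (3.0, 3.0)]
y = [-1, 1, 1]
# Assumed multipliers for this toy set (zero for the non-support vector).
alphas = [0.25, 0.25, 0.0]

w = weights_from_multipliers(alphas, X, y)
b = offset_from_support_vectors(w, alphas, X, y)
print(w, b)
```

With these values the two support vectors sit exactly on the margin hyperplanes, while the third point satisfies the constraint with room to spare and therefore does not influence \( \varvec{w} \) or \( b \).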

Cortes and Vapnik (1995) proposed a modified maximum-margin idea that allows for mislabelled examples. If there exists no hyperplane that can split the examples (some points may fall within the margins), the soft margin method chooses a hyperplane that splits the examples as cleanly as possible, while still maximizing the distance to the nearest cleanly split examples. The method introduces slack variables \( \zeta_{i} \), which measure the degree of misclassification of the data \( \varvec{x}_{i} \).

The optimization becomes a trade-off between a large margin and a small error penalty. The final equation leads to a quadratic programming solution. The membership decision rule is based on the sign function, and a new sample is classified by \( y_{\text{new}} = \text{sgn} \left( {\varvec{w} \cdot \varvec{x}_{\text{new}} - b} \right) \), where \( (\varvec{w}, b) \) are the hyperplane parameters found during the training process, with the sign convention of the hyperplane definition \( \varvec{w} \cdot \varvec{x} - b = 0 \), and \( \varvec{x}_{\text{new}} \) is an unseen sample.
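The slack variables and the decision rule can be sketched as follows. The example assumes the common soft-margin objective \( \frac{1}{2}\left\| \varvec{w} \right\|^{2} + C\sum_{i} \zeta_{i} \) with \( \zeta_{i} = \max (0, 1 - y_{i} (\varvec{w} \cdot \varvec{x}_{i} - b)) \); the penalty parameter `C`, the toy data, the fixed hyperplane, and the function names are assumptions for illustration, since the text does not give the exact objective used. The code follows the convention \( \varvec{w} \cdot \varvec{x} - b = 0 \) for the hyperplane; with the opposite sign convention for \( b \), the rule reads \( \text{sgn}(\varvec{w} \cdot \varvec{x} + b) \).

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def slack(w, b, x, y):
    """Slack of one sample: 0 outside the margin, >1 if misclassified."""
    return max(0.0, 1.0 - y * (dot(w, x) - b))

def soft_margin_objective(w, b, X, Y, C):
    """Assumed objective: 0.5*||w||^2 + C * (sum of slacks)."""
    return 0.5 * dot(w, w) + C * sum(slack(w, b, x, y) for x, y in zip(X, Y))

def predict(w, b, x_new):
    """Decision rule sgn(w.x_new - b); a score of exactly 0 is assigned to +1."""
    return 1 if dot(w, x_new) - b >= 0 else -1

# Hypothetical hyperplane and toy data; the last two points break the hard margin.
w, b = (0.5, 0.5), 1.0
X = [(0.0, 0.0), (2.0, 2.0), (1.0, 1.0), (2.0, 1.0)]
Y = [-1, 1, 1, -1]

slacks = [slack(w, b, x, y) for x, y in zip(X, Y)]
objective = soft_margin_objective(w, b, X, Y, C=1.0)
print(slacks, objective, predict(w, b, (3.0, 3.0)))
```

A larger `C` penalizes margin violations more heavily and pushes the solution toward the hard-margin case, whereas a smaller `C` tolerates more violations in exchange for a wider margin.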

1.3 Nonlinear classification

In addition to performing linear classification, SVMs can efficiently perform nonlinear classification using what is called the kernel trick, implicitly mapping their inputs into high-dimensional feature spaces. For machine learning algorithms, the kernel trick is a way of mapping observations from a general set S into an inner product space V, in the hope that the observations will gain meaningful linear structure in V. Linear classifications in V are equivalent to generic classifications in S. The trick to avoid the explicit mapping is to use learning algorithms that only require dot products between the vectors in V, and to choose the mapping such that these high-dimensional dot products can be computed within the original space by means of a kernel function. The resulting algorithm is formally similar, and the maximum-margin hyperplane can be fitted in the transformed feature space. The transformation may be nonlinear and the transformed space high dimensional; therefore, even if the classifier is a hyperplane in the high-dimensional feature space, it may be nonlinear in the original input space (Fig. 19). There exist several choices of kernel function \( k \). The kernel is related to the transform \( \phi (\varvec{x}_{i} ) \) by the equation \( k(\varvec{x}_{i} ,\varvec{x}_{j} ) = \phi (\varvec{x}_{i} ) \cdot \phi (\varvec{x}_{j} ) \).
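The identity \( k(\varvec{x}_{i} ,\varvec{x}_{j} ) = \phi (\varvec{x}_{i} ) \cdot \phi (\varvec{x}_{j} ) \) can be checked numerically on a small case. The sketch below uses a homogeneous polynomial kernel of degree 2 in two dimensions, chosen only because its feature map can be written explicitly; it is an illustrative assumption and not the kernel used in this work.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def phi(x):
    """Explicit feature map from R^2 to R^3 for the degree-2 polynomial kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Kernel k(x, z) = (x.z)^2, computed entirely in the original space."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
explicit = dot(phi(x), phi(z))   # dot product in the feature space V
implicit = poly2_kernel(x, z)    # same value without ever building phi
print(explicit, implicit)
```

Both routes give the same number up to rounding, but the kernel evaluation never constructs the higher-dimensional vectors, which is what makes the approach practical when V is very high dimensional.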

The Gaussian kernel, \( k(\varvec{x}_{i} ,\varvec{x}_{j} ) = \exp \left( { - \frac{1}{2} \left\| \varvec{x}_{i} - \varvec{x}_{j} \right\|^{2} /\sigma^{2} } \right) \), is a common and generally good choice, and it gave the best results in our study. Therefore, the classifications in this work use this kernel.
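A minimal sketch of the Gaussian kernel as written above, together with the kernelized decision rule that results when dot products with the training vectors are replaced by kernel evaluations, \( \text{sgn}\left( \sum_{i} \alpha_{i} y_{i} k(\varvec{x}_{i} ,\varvec{x}) - b \right) \). The support vectors, multipliers, offset, and the value of `sigma` are assumed for illustration and were not obtained by training; the function names are hypothetical.

```python
import math

def gaussian_kernel(xi, xj, sigma=1.0):
    """k(xi, xj) = exp(-0.5 * ||xi - xj||^2 / sigma^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return math.exp(-0.5 * sq_dist / sigma ** 2)

def gram_matrix(X, sigma=1.0):
    """Matrix of pairwise kernel values for a list of samples."""
    return [[gaussian_kernel(xi, xj, sigma) for xj in X] for xi in X]

def kernel_decision(x_new, support_vectors, labels, alphas, b, sigma=1.0):
    """sgn(sum_i alpha_i * y_i * k(x_i, x_new) - b); a score of 0 maps to +1."""
    score = sum(a * yi * gaussian_kernel(xi, x_new, sigma)
                for a, xi, yi in zip(alphas, support_vectors, labels)) - b
    return 1 if score >= 0 else -1

# Hypothetical support vectors and multipliers (assumed, not trained).
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
labels = [-1, 1]
alphas = [1.0, 1.0]
b = 0.0

K = gram_matrix([(0.0, 0.0), (1.0, 1.0)])
near_negative = kernel_decision((0.5, 0.0), support_vectors, labels, alphas, b)
near_positive = kernel_decision((2.0, 1.5), support_vectors, labels, alphas, b)
print(K[0][1], near_negative, near_positive)
```

The width \( \sigma \) controls how quickly the influence of a support vector decays with distance: small values give very local decision boundaries, large values approach a linear classifier.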

1.4 Multiclass SVM

Although SVMs are intrinsically binary classifiers, in practice classification into several classes is usually of interest. Different multiclass classification strategies can be adopted, based either on binary analysis or on the less used “all-together” method. The former is the dominant approach; it reduces the single multiclass problem to multiple binary classification problems and can take the following forms (among others):

1.4.1 One versus all

This strategy involves training N different binary classifiers, each one trained to distinguish the data in a single class from the data in all remaining classes. Classification of new instances is done by a winner-takes-all strategy, in which the classifier with the highest output function assigns the class.
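The winner-takes-all step can be sketched as follows, assuming one linear scorer \( \varvec{w} \cdot \varvec{x} - b \) per class. The class labels, the hyperplane parameters, and the function names are hypothetical placeholders, not values fitted in the study.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def one_vs_all_predict(classifiers, x):
    """Return the label whose 'class versus rest' scorer gives the highest output.

    classifiers maps each label to the (w, b) of its binary classifier.
    """
    def score(label):
        w, b = classifiers[label]
        return dot(w, x) - b
    return max(classifiers, key=score)

# Hypothetical scorers for three classes (one binary classifier per class).
classifiers = {
    "A": ((-1.0, 0.0), 0.0),
    "B": ((0.0, 1.0), 0.5),
    "C": ((1.0, 0.0), 0.0),
}

print(one_vs_all_predict(classifiers, (2.0, 0.0)))   # scores: A=-2, B=-0.5, C=2
print(one_vs_all_predict(classifiers, (-3.0, 1.0)))  # scores: A=3, B=0.5, C=-3
```

Because the raw outputs of independently trained classifiers are compared directly, this strategy implicitly assumes that their scores are on comparable scales.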

1.4.2 One versus one

This strategy builds N(N − 1)/2 binary classifiers, one for every pair of the N classes. Classification is done by a max-wins voting strategy, in which every classifier assigns the instance to one of its two classes, the vote for the assigned class is increased by one, and finally the class with the most votes determines the instance classification. The one-versus-one classification proved to be more robust in the majority of cases and, showing the best results, is the one selected in our study.
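A minimal sketch of max-wins voting, assuming one linear classifier per pair of classes whose non-negative output votes for the first class of the pair. The class labels, the one-dimensional feature, the hyperplane parameters, and the function names are hypothetical; ties are broken here by insertion order, which is an arbitrary choice for the example.

```python
from collections import Counter
from itertools import combinations

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def one_vs_one_predict(pairwise, x):
    """Max-wins voting over pairwise classifiers.

    pairwise maps (first_label, second_label) to (w, b); a score
    w.x - b >= 0 votes for first_label, otherwise for second_label.
    """
    votes = Counter()
    for (first, second), (w, b) in pairwise.items():
        winner = first if dot(w, x) - b >= 0 else second
        votes[winner] += 1
    return votes.most_common(1)[0][0]

# Hypothetical one-dimensional problem: class A near 0, B near 5, C near 10.
labels = ["A", "B", "C"]
pairwise = {
    ("A", "B"): ((-1.0,), -2.5),  # votes A when x < 2.5
    ("A", "C"): ((-1.0,), -5.0),  # votes A when x < 5
    ("B", "C"): ((-1.0,), -7.5),  # votes B when x < 7.5
}

# One classifier per unordered pair of classes: N(N - 1)/2 in total.
n_pairs = len(list(combinations(labels, 2)))
predictions = [one_vs_one_predict(pairwise, (v,)) for v in (1.0, 4.0, 9.0)]
print(n_pairs, predictions)
```

Each pairwise classifier is trained only on the samples of its two classes, so the individual problems are smaller than in the one-versus-all scheme, at the cost of a number of classifiers that grows quadratically with the number of classes.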

Cite this article

Riedel, I., Guéguen, P., Dalla Mura, M. et al. Seismic vulnerability assessment of urban environments in moderate-to-low seismic hazard regions using association rule learning and support vector machine methods. Nat Hazards 76, 1111–1141 (2015). https://doi.org/10.1007/s11069-014-1538-0

DOI: https://doi.org/10.1007/s11069-014-1538-0